By Monica E. Oss, Chief Executive Officer, OPEN MINDS

Artificial intelligence and ChatGPT have captured the attention of the popular press. From writing resumes to planning travel adventures to completing law school applications, people are wild about the potential uses of artificial intelligence (AI) to make their lives easier.

There is just as much coverage of the promise of ChatGPT in health and human services. AI-infused life coaching and mental health counseling have been with us for a while, but the emergence of ChatGPT is taking them to a new level. There is AI as counselor and coach, and there is AI in the therapeutic process, as in Does Artificial Intelligence Belong In Therapy? and ChatGPT Changes Its Mind: Maybe Antidepressants Do More Harm Than Good.

I’ve always thought that AI has great potential for diagnosing all types of physical and mental conditions. Almost a decade ago, I sat in on a presentation of the impressive results of using AI to review structured mental health interviews and make diagnoses. More broadly, AI has many diagnostic applications across the mental health field. There are similar examples for other conditions, such as ChatGPT Accurately Selects Breast Imaging Tests, Aids Decision-Making and Pathologists Look Beyond Microscope To A New Diagnostic Tool: AI.

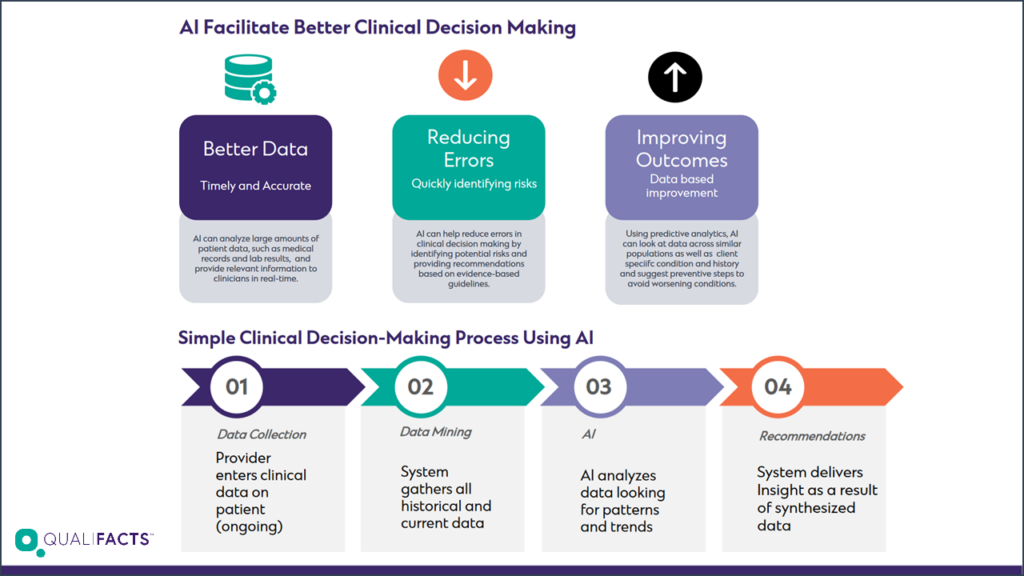

But to me, the big questions are how, and how much, AI and its many derivatives should be used in clinical decision support. A new article in BMC Medical Ethics asks whether AI should be used to support clinical ethical decisionmaking. As we move to more value-based reimbursement with downside financial risk and try to elevate health care professionals to practice at the ‘very top’ of their license, the questions of who makes decisions, and how, about who gets what interventions, and for what, loom large.

On one hand, using computing power to ‘digest’ the growing volume of medical literature and knowledge is an advantage. No human health care professional can keep up with it all. On the other hand, any decisionmaking algorithm is captive to the research, the assumptions, and the previous datasets that are part of its universe.

Ideally, the ‘input’ to every clinical decisionmaking tool should be transparent. And any enhanced clinical decisionmaking support tools should be designed so that they can be used as part of consumer-directed care, increasing the information available to consumers so they can make more informed decisions about managing their health conditions.

With the amount of money going into AI investments in health care (see AI Chatbot K Health Picks Up $59M In Fresh Funding, Inks Partnership With Cedars-Sinai and Revolutionizing Medical AI Development: Flywheel Announces $54 Million In Series D Funding Led By Novalis LifeSciences And NVentures), new models will be coming soon.